As a young woman with a smartphone and multiple social media accounts, I’ve always thought very deeply about what images I’m posting and who I share them with. I’m not alone; the fear of revenge porn is, sadly, one that young people have become accustomed to.

Recently though, I’ve become worried about the idea of my face being overlaid in a pornographic video. Why? Because 96% of deepfakes are pornographic. This doesn’t just happen to celebrities; it also happens to ordinary women outside of the public eye.

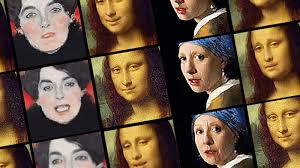

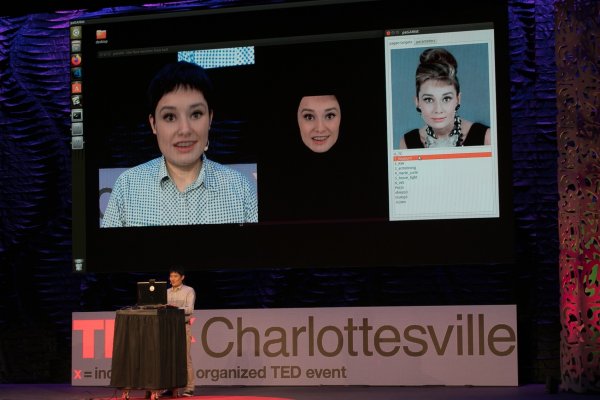

Deepfakes are manipulations of human images which are superimposed onto footage using artificial intelligence-based software. Victims of deepfake pornographic attacks include actors Gal Gadot, Emma Watson, Scarlett Johansson, and Indian investigative journalist Rana Ayyub.

To make matters worse, technological advancements are making deepfakes far more difficult to recognise.

Not all deepfakes are pornographic – far from it – but their potential to threaten the privacy and security of women is profound. Indeed, the case of US politician Katie Hill, who was forced to resign after sexually explicit images of her were released to the media, is noteworthy.

Deepfakes highlight a lack of respect for women, their sexuality, and their bodies. Deepfakes, like revenge porn, can be used to demean and silence women and ruin their careers or reputations. It is a very modern form of sexual violence.

As Forbes magazine wrote: “In addition to violating the victim’s rights to her own images and privacy, a major concern is deepfakes’ potential impact on revenge porn. As we saw in the case with Representative Katie Hill, revenge porn can end someone’s political career. With technologies like deepfakes, revenge porn or cyber smear campaigns can take on a whole new dimension and potentially impact a wider population of victims.”

It is already abundantly clear that the anonymity of communicating online helps to fuel the harassment and abuse of women in explicit ways. The internet is not a safe space for women and deepfakes only add to this problem.

Pornographic deepfakes aren’t just harmful to the women who are the intended targets. The videos that provide the basis for deepfakes are often taken from pornographic content without the consent of actors or directors. Their bodies are being used in contexts they haven’t agreed to, and definitely aren’t comfortable with. Sex workers whose content has been abused for the creation of deepfakes are made to appear complicit in the harassment of these women.

As a recent article in Wired explained: “Instead of merely worrying that your own image will be distributed in a way that feels off-brand or even offensive, performers must now consider the possibility that their bodies will be cut up into parts and reassembled, Frankenstein-style, into a video intended to harass and humiliate someone who never consented to be sexualised in this manner.”

So we see a strange dichotomy where women’s bodies are both weaponised and victimised within the same space. The consistent factor? That both of the parties have lost their autonomy and consent over their own bodies.

Obviously, there are some major ethical and legal debates to be had about deepfakes. Is permission always required before someone’s image is used? I have previously written about how the Dalí Museum in Florida used deepfake technology so visitors could interact with the deceased artist Salvador Dalí. Is this an invasion of privacy or a violation of consent? Or should we focus more on the intent behind the creation of the deepfake? While these are difficult questions to answer, if we understand the creation of pornographic deepfakes as sexual abuse, we can view them within a broader legal framework.

In Australia, revenge porn or image-based sexual abuse (IBSA) is a Federal criminal offence under the Enhancing Online Safety (Non-consensual Sharing of Intimate Images) Act, and all states and territories (except Tasmania) have made it a criminal offence. This is a positive development and provides some legal basis from which deepfakes can be included in definitions of IBSA.

The difficulty with pornographic deepfakes, however, is the ability to obscure identity. You don’t need a nude picture of your target; you only need their face, which can be taken off any social media account (including LinkedIn) or from a quick Google search. This makes tracing where the video originated a tricky task. Furthermore, these videos can be spread across vast areas of the internet, making their ultimate destruction or erasure almost impossible. While the existing legislation provides a solid foundation, it is not fully equipped to deal with deepfakes.

As someone who is passionate about cyber security, I’ll be the first to admit that advancing technology presents exciting opportunities. However, we need to acknowledge that it also creates instability, not only through cyber attacks but also by reinforcing dangerous stereotypes about women and by enabling online abuse.

We need to move past advising women to ‘be careful’ with what they share online, and acknowledge the creation of deepfake pornography for what it is: sexual violence. Until then, the humiliation and silencing of women online will continue.